The digital marketing landscape is experiencing a seismic shift. While traditional Search Engine Optimization (SEO) has been the cornerstone of online visibility for decades, a new player has entered the arena: Generative Engine Optimization (GEO). This revolutionary approach is fundamentally changing how we think about content optimization and user engagement.

Have you ever wondered why your carefully crafted SEO strategy isn’t delivering the results it used to? You’re not alone. The rise of AI-powered search engines and generative technologies is reshaping the rules of the game, making it crucial for marketers to understand both traditional SEO and the emerging GEO landscape.

What is Traditional SEO and Why Has It Dominated Digital Marketing?

The Foundation of Search Engine Optimization

Traditional SEO has been the backbone of digital marketing since the early days of the internet. It’s like being the most popular kid in school – everyone wants to sit at your table, but you need to follow certain rules to maintain that status. SEO focuses on optimizing websites and content to rank higher in search engine results pages (SERPs), primarily targeting algorithms used by Google, Bing, and other search engines.

The fundamental principle behind traditional SEO is straightforward: create content that search engines can easily understand, crawl, and index. This involves optimizing various elements including keywords, meta descriptions, title tags, backlinks, and technical aspects like site speed and mobile responsiveness. Think of it as speaking the search engine’s language fluently.

Traditional SEO operates on the premise that users type specific queries into search engines and expect to receive a list of relevant web pages. The goal is to appear as high as possible in these results, ideally in the coveted top three positions that capture the majority of clicks.

Key Components of Traditional SEO Strategies

Traditional SEO encompasses several critical components that work together like a well-orchestrated symphony. On-page optimization involves crafting content around target keywords, optimizing meta tags, and ensuring proper heading structure. Off-page SEO focuses on building authority through quality backlinks and social signals.

Technical SEO addresses the behind-the-scenes elements that affect how search engines crawl and index your site. This includes optimizing site architecture, improving page load speeds, ensuring mobile compatibility, and implementing structured data markup. Content marketing within traditional SEO emphasizes creating valuable, keyword-rich content that satisfies user intent while appealing to search algorithms.

Local SEO has become increasingly important for businesses with physical locations, involving optimization for location-based searches and Google Business Profile listings (formerly Google My Business). These components have formed the foundation of successful digital marketing campaigns for years, but the landscape is rapidly evolving.

Understanding Generative Engine Optimization (GEO): The New Frontier

Defining GEO in the AI-Powered Era

Generative Engine Optimization represents a paradigm shift in how we approach search optimization. Instead of optimizing for traditional search results, GEO focuses on optimizing content for AI-powered generative engines that provide direct answers rather than lists of links. It’s like having a knowledgeable assistant who can instantly synthesize information from multiple sources to provide comprehensive answers.

GEO recognizes that modern users increasingly prefer getting immediate, complete answers rather than clicking through multiple websites. This approach optimizes content to be easily understood and utilized by AI systems that generate responses based on vast amounts of training data and real-time information processing.

The core philosophy behind GEO is fundamentally different from traditional SEO. While SEO aims to drive traffic to your website, GEO focuses on ensuring your content becomes part of the AI-generated responses that users receive. This means optimizing for visibility within AI-generated content rather than traditional search rankings.

How Generative AI is Reshaping Search Results

Generative AI engines are transforming the search experience by providing synthesized, conversational responses instead of traditional blue links. These systems can understand context, interpret nuanced queries, and generate comprehensive answers that draw from multiple sources simultaneously. It’s like having access to a research team that can instantly compile and synthesize information on any topic.

The technology behind generative search relies on large language models, which handle context, intent, and nuance in ways that keyword-based ranking algorithms cannot. These systems can handle complex, multi-part queries and provide detailed explanations, step-by-step instructions, or comparative analyses without requiring users to visit multiple websites.

This shift represents a fundamental change in user behavior and expectations. People are becoming accustomed to receiving immediate, detailed answers rather than conducting their own research across multiple sources. This trend is accelerating as AI-powered search tools become more sophisticated and widely adopted.

Core Differences Between GEO and Traditional SEO

Content Creation and Optimization Approaches

The content creation philosophies between GEO and traditional SEO are as different as night and day. Traditional SEO content is often optimized around specific keywords and phrases, with careful attention to keyword density, placement, and semantic variations. The goal is to signal relevance to search engine crawlers while maintaining readability for human users.

GEO content, on the other hand, prioritizes comprehensive coverage, authority, and clarity. Instead of focusing on specific keywords, GEO content aims to be the most complete and accurate source of information on a topic. This means creating content that answers related questions, provides context, and demonstrates deep expertise in the subject matter.

Traditional SEO content often follows specific formulas – optimal word counts, keyword placement rules, and structured formatting requirements. GEO content is more flexible and focuses on natural language patterns that AI systems can easily understand and synthesize. This approach emphasizes storytelling, detailed explanations, and comprehensive coverage of topics.

User Intent and Query Processing

Traditional SEO targets specific search queries and keyword combinations that users might type into search engines. Marketers conduct extensive keyword research to identify high-volume, low-competition terms and optimize content accordingly. This approach works well for users who know exactly what they’re looking for and how to search for it.

GEO addresses a broader spectrum of user intent, including conversational queries, complex questions, and exploratory searches. Users interacting with generative AI engines often ask questions in natural language, seek explanations, or request comparisons and analyses. This requires content that can address multiple angles and provide comprehensive information.

The query processing mechanisms also differ significantly. Traditional search engines match keywords and analyze relevance signals to rank pages. Generative engines understand context, interpret intent, and synthesize information from multiple sources to create original responses that directly address user needs.

Ranking Factors and Algorithm Considerations

Traditional SEO ranking factors are widely studied and include backlink quality, content relevance, technical performance, and user engagement signals, along with overall site authority, which third-party tools estimate with scores such as domain authority. These factors have been refined over decades of search engine evolution and form the basis of most SEO strategies.

GEO operates on different principles entirely. Instead of ranking web pages, generative engines evaluate content based on its accuracy, comprehensiveness, authority, and usefulness for answer generation. The focus shifts from individual page optimization to building comprehensive, authoritative content collections that AI systems can trust and utilize.

Authority and expertise become even more critical in GEO. Generative engines appear to favor content from sources that demonstrate deep knowledge and accuracy. This suggests that brand reputation, author credentials, and content quality are likely to carry more weight than traditional ranking signals like exact keyword matches or backlink quantity.

Traditional SEO: Strengths and Current Applications

Proven Strategies That Still Work

Traditional SEO isn’t dead – it’s evolving. Many fundamental SEO principles remain highly effective, particularly for certain types of searches and business models. Technical optimization, site architecture, and user experience continue to play crucial roles in digital success, regardless of how search technology evolves.

Link building and domain authority remain important signals for establishing credibility and expertise. While the specific mechanics may change, the underlying principle of building trust and authority through quality connections and citations continues to be valuable. Local SEO strategies are particularly resilient, as location-based searches often require traditional ranking approaches.

Content optimization for featured snippets and knowledge panels represents an area where traditional SEO strategies align well with generative engine requirements. Creating clear, structured content that answers specific questions directly benefits both traditional search rankings and AI-powered response generation.

When Traditional SEO Remains Most Effective

Traditional SEO continues to excel in several key areas. E-commerce websites often benefit more from traditional SEO approaches, as product searches frequently result in traditional search results rather than AI-generated responses. Users looking to make purchases typically want to compare options, read reviews, and visit specific retailer websites.

Local businesses with physical locations should maintain strong traditional SEO foundations. Local search results, Google Business Profile (formerly Google My Business) optimization, and location-based queries often bypass generative responses in favor of traditional map results and business listings. This makes traditional local SEO strategies essential for brick-and-mortar businesses.

Industries with highly regulated content or those requiring specific legal disclaimers may find traditional SEO more suitable. Financial services, healthcare, and legal sectors often need to direct users to official websites rather than relying on AI-generated summaries that might lack necessary legal protections or disclaimers.

GEO Advantages: Why Marketers Are Making the Switch

Enhanced User Experience Through AI Integration

GEO delivers superior user experiences by providing immediate, comprehensive answers without requiring users to navigate multiple websites. This approach eliminates the frustration of endless clicking and searching, offering a streamlined path to information. Users can engage in natural conversations with AI systems, asking follow-up questions and receiving clarified explanations.

The personalization capabilities of generative engines far exceed those of traditional search. AI systems can adapt responses based on user context, previous interactions, and specific needs. This creates more relevant, tailored experiences that traditional search results cannot match. Users receive information that’s not just accurate but specifically relevant to their situation.

Accessibility improvements represent another significant advantage. Generative engines can explain complex topics in simple terms, provide information in multiple formats, and adapt to different learning styles. This democratizes access to information and makes complex subjects more approachable for diverse audiences.

Improved Content Relevance and Personalization

GEO enables unprecedented levels of content personalization. Unlike traditional SEO, which serves the same content to all users searching for specific keywords, generative engines can tailor responses based on individual context and needs. This means your content can be presented differently to different users while maintaining its core value and accuracy.

The relevance improvements extend beyond simple keyword matching. Generative engines understand semantic relationships, context, and user intent in sophisticated ways. This allows for more nuanced content optimization that focuses on meaning and value rather than specific keyword combinations.

Content created with GEO principles often performs better across multiple search scenarios. Instead of optimizing separate pages for different keyword variations, GEO content can address multiple related queries within a single, comprehensive piece. This efficiency reduces content maintenance overhead while improving overall visibility.

Technical Implementation: GEO vs SEO Strategies

Keyword Research Evolution in the GEO Era

Keyword research for GEO requires a fundamental shift in thinking. Instead of focusing on specific search terms, GEO keyword research emphasizes topic clusters, semantic relationships, and question patterns. This involves identifying the comprehensive range of information users might seek about a topic rather than targeting individual keywords.

Traditional keyword tools remain useful but require different interpretation for GEO applications. Volume and competition metrics become less important than topic comprehensiveness and authority potential. Long-tail keywords and question-based queries become more valuable as they align with conversational AI interaction patterns.

Intent analysis becomes more sophisticated in GEO keyword research. Understanding the full spectrum of user motivations, from initial awareness through decision-making, helps create content that addresses complete user journeys rather than individual search moments. This comprehensive approach builds stronger topical authority.
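One way to start looking at question patterns is to bucket a list of queries by the word they open with. The sketch below is only an illustration: the `QUESTION_WORDS` tuple, the function name, and the sample queries are assumptions made for this example, not the output of any keyword tool.

```python
from collections import defaultdict

# Hypothetical list of opening words that signal a question-style query.
QUESTION_WORDS = ("what", "how", "why", "when", "which", "who", "where")


def group_by_question_pattern(queries):
    """Bucket queries by their first word; non-questions go under 'other'."""
    groups = defaultdict(list)
    for query in queries:
        words = query.strip().lower().split()
        first = words[0] if words else ""
        key = first if first in QUESTION_WORDS else "other"
        groups[key].append(query)
    return dict(groups)


if __name__ == "__main__":
    # Made-up sample queries for illustration only.
    sample = [
        "What is generative engine optimization?",
        "how does GEO differ from SEO",
        "geo vs seo",
    ]
    for pattern, items in group_by_question_pattern(sample).items():
        print(pattern, items)
```

Buckets with many entries hint at the questions a comprehensive piece on the topic should answer, while the "other" bucket tends to hold the short keyword-style searches that traditional SEO already targets.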

Content Structure and Format Optimization

GEO content structure prioritizes clarity, comprehensiveness, and logical flow. Unlike traditional SEO content that might target specific keyword densities, GEO content focuses on providing complete, accurate information that AI systems can easily understand and synthesize. This means using clear headings, logical progression, and comprehensive coverage of topics.

Formatting for GEO emphasizes readability for both human users and AI systems. This includes using descriptive headings, bullet points for complex information, and clear topic transitions. The goal is creating content that AI engines can easily parse and integrate into generated responses while maintaining engagement for human readers.

Schema markup and structured data remain important in GEO implementation. These elements describe a page’s content type, relationships, and authorship in machine-readable form, which helps automated systems interpret it. Proper implementation of structured data may improve the likelihood of content being utilized in AI-generated responses, although the size of that effect is not well established.
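To make structured data concrete, here is a minimal sketch that builds a schema.org `Article` description as JSON-LD and wraps it in the script tag that pages normally use to embed it. The function names and the sample headline, author, and date are placeholders invented for this example; only the schema.org property names (`headline`, `author`, `datePublished`, `description`) come from the public vocabulary.

```python
import json


def build_article_jsonld(headline, author_name, date_published, description):
    """Return a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-01-15"
        "description": description,
    }
    return json.dumps(data, indent=2)


def wrap_in_script_tag(jsonld):
    """Wrap a JSON-LD string in the script tag used to embed it in HTML."""
    return '<script type="application/ld+json">\n' + jsonld + "\n</script>"


if __name__ == "__main__":
    # All values below are placeholders, not real publication data.
    jsonld = build_article_jsonld(
        headline="GEO vs Traditional SEO",
        author_name="Jane Example",
        date_published="2024-01-15",
        description="How generative engine optimization differs from SEO.",
    )
    print(wrap_in_script_tag(jsonld))
```

Generating the markup from one function keeps it consistent across pages. Whether a given AI system actually reads it is outside the page owner's control.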

Measuring Success: Analytics and KPIs

Traditional SEO Metrics That Matter

Traditional SEO success metrics focus on visibility and traffic generation. Organic search rankings, click-through rates, and website traffic remain important indicators of SEO performance. These metrics provide clear, quantifiable measures of search engine visibility and user engagement with your content.

Conversion tracking in traditional SEO emphasizes the customer journey from search to website visit to desired action. This includes measuring bounce rates, time on page, and conversion rates from organic search traffic. These metrics help understand how effectively SEO efforts translate into business results.
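The arithmetic behind two of these metrics is simple enough to sketch. The figures in the example are invented, and the function names are this example's own rather than those of any analytics product.

```python
def click_through_rate(clicks, impressions):
    """Share of search impressions that led to a click (0.0 if no impressions)."""
    return clicks / impressions if impressions else 0.0


def conversion_rate(conversions, sessions):
    """Share of organic sessions that ended in the desired action."""
    return conversions / sessions if sessions else 0.0


if __name__ == "__main__":
    # Invented numbers for illustration only.
    ctr = click_through_rate(clicks=50, impressions=1000)
    cvr = conversion_rate(conversions=5, sessions=200)
    print(f"CTR: {ctr:.1%}")
    print(f"Conversion rate: {cvr:.1%}")
```

Both functions return 0.0 when the denominator is zero, so a page with no impressions or sessions does not raise an error in a report.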

Backlink analysis and domain authority tracking continue to be relevant for understanding competitive positioning and content credibility. These metrics provide insights into how well your content establishes authority within your industry and how it compares to competitor content.

New GEO Performance Indicators

GEO metrics require new approaches to success measurement. Instead of focusing solely on website traffic, GEO success involves tracking how often your content appears in AI-generated responses, the accuracy of information presentation, and the context in which your content is referenced.

Brand mention tracking becomes crucial in GEO analytics. Since users might receive information from your content without visiting your website, monitoring how frequently your brand or expertise is referenced in AI responses provides insights into your content’s influence and reach.

Authority scoring takes on new dimensions in GEO measurement. This involves tracking how AI systems perceive your content’s credibility and expertise. Metrics might include citation frequency in AI responses, accuracy ratings, and the breadth of topics where your content serves as a primary source.
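Because tooling for these indicators is still immature, a rough first measure can be computed by hand: collect a sample of AI-generated answers to questions in your niche and count how many mention your brand. The sketch below assumes the answers were gathered manually and are held as plain strings. The brand name and sample answers are made up, and no AI service is queried.

```python
def mention_rate(responses, brand):
    """Fraction of collected AI answers that mention the brand (case-insensitive)."""
    if not responses:
        return 0.0
    needle = brand.lower()
    hits = sum(1 for text in responses if needle in text.lower())
    return hits / len(responses)


if __name__ == "__main__":
    # Hypothetical, hand-collected answers; "Acme Analytics" is a made-up brand.
    sampled_answers = [
        "According to Acme Analytics, GEO focuses on answer inclusion.",
        "Traditional SEO targets rankings in search results.",
        "A guide from acme analytics compares both approaches.",
    ]
    rate = mention_rate(sampled_answers, "Acme Analytics")
    print(f"Mentioned in {rate:.0%} of sampled answers")
```

A plain substring match will miss paraphrased references and can over-count common words, so treat the number as a trend to watch over time, not a precise score.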

Industry Impact and Future Predictions

How Major Search Engines Are Adapting

Major search engines are rapidly integrating generative AI capabilities into their platforms. Google’s integration of AI-powered features, Microsoft’s Copilot in Bing (launched as Bing Chat), and emerging AI-first search engines are reshaping the competitive landscape. These changes represent fundamental shifts in how search results are generated and presented to users.

The transformation isn’t just about adding AI features to existing search engines. New platforms are emerging that prioritize conversational interactions and comprehensive answer generation over traditional link-based results. This evolution is creating new opportunities for content creators who understand how to optimize for these emerging platforms.

Search engine algorithms are becoming more sophisticated in understanding context, intent, and content quality. This evolution favors comprehensive, authoritative content over keyword-optimized pages designed primarily for traditional search algorithms. The focus is shifting toward genuine expertise and user value.

The Timeline for GEO Adoption

GEO adoption is accelerating rapidly across industries and user demographics. Early adopters are already seeing benefits from optimizing content for AI-powered search experiences. The timeline for widespread adoption varies by industry, with technology, education, and information-heavy sectors leading the transition.

Consumer behavior changes are driving faster GEO adoption than many experts predicted. Younger demographics, in particular, are embracing AI-powered search tools and expecting immediate, comprehensive answers. This behavioral shift is pressuring businesses to adapt their content strategies accordingly.

The integration timeline suggests that hybrid approaches combining traditional SEO and GEO strategies will dominate the next few years. Organizations that begin implementing GEO principles now will have significant advantages as the technology becomes more widespread and sophisticated.

Challenges and Limitations of Each Approach

Traditional SEO Obstacles in Modern Search

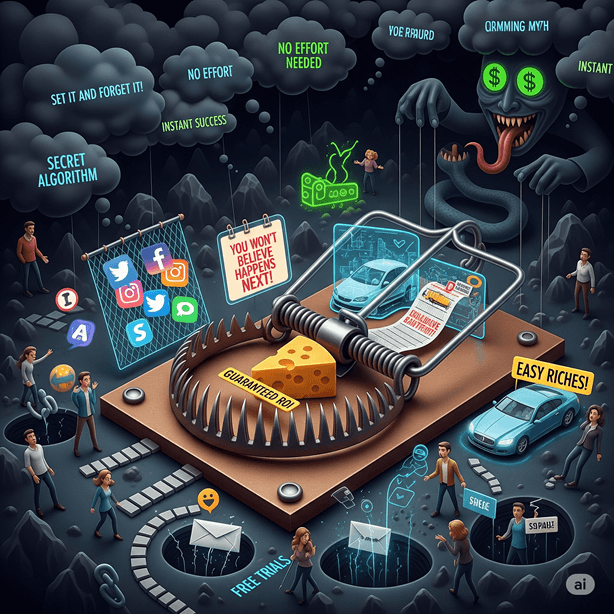

Traditional SEO faces increasing challenges as user expectations and search technologies evolve. Algorithm updates are becoming more frequent and unpredictable, making it difficult to maintain consistent rankings using traditional optimization techniques. The focus on user experience and content quality over technical optimization tricks has made many traditional SEO tactics less effective.

Competition for traditional search rankings has intensified dramatically. High-value keywords now require substantial resources and expertise to rank effectively. This increased competition, combined with evolving user behaviors, is making traditional SEO less accessible for smaller businesses and newer websites.

The rise of zero-click searches, where users get answers directly from search results without visiting websites, is reducing the traffic generation potential of traditional SEO. This trend challenges the fundamental value proposition of driving website visits through search optimization.

GEO Implementation Hurdles

GEO implementation faces its own set of challenges, primarily related to the newness of the technology and lack of established best practices. Unlike traditional SEO, which has decades of documented strategies and case studies, GEO requires experimentation and adaptation as the technology evolves.

Measuring GEO success can be complex because traditional analytics tools aren’t designed to track AI-generated content inclusion. This creates challenges in demonstrating ROI and optimizing strategies based on performance data. New measurement approaches and tools are still being developed.

The authority requirements for GEO can be more stringent than traditional SEO. AI systems often prioritize established, highly credible sources over newer content. This can create barriers for emerging brands or websites trying to build authority in competitive spaces.

Making the Right Choice for Your Business

Factors to Consider When Choosing Your Strategy

Choosing between GEO and traditional SEO depends on several critical factors. Your target audience’s search behavior plays a crucial role – if your users primarily use traditional search engines and prefer visiting websites for detailed information, traditional SEO might remain your primary focus. However, if your audience embraces AI-powered tools and values immediate answers, GEO becomes essential.

Industry considerations also influence strategy selection. Technical, educational, and information-heavy industries often benefit more from GEO approaches because users frequently seek comprehensive explanations and detailed answers. E-commerce and service-based businesses might find traditional SEO more effective for driving direct conversions.

Resource availability impacts implementation feasibility. GEO requires different skill sets and content approaches compared to traditional SEO. Organizations with limited resources might need to prioritize one approach initially while building capabilities for the other over time.

Hybrid Approaches: Combining GEO and SEO

The most effective modern digital marketing strategies combine elements of both GEO and traditional SEO. This hybrid approach acknowledges that different users have different preferences and that various types of content serve different purposes within the customer journey.

Hybrid strategies involve creating comprehensive, authoritative content that performs well in both traditional search results and AI-generated responses. This requires balancing keyword optimization with natural language patterns, maintaining technical SEO best practices while ensuring content is easily understood by AI systems.

Implementation of hybrid approaches often involves content tiering – creating detailed, comprehensive pieces optimized for GEO while maintaining traditional SEO pages for specific conversion goals. This strategy maximizes visibility across different search environments while maintaining clear business objectives.

Conclusion

The battle between Generative Engine Optimization and traditional SEO isn’t really a competition – it’s an evolution. While traditional SEO provided the foundation for digital marketing success over the past two decades, GEO represents the next phase of this journey. Smart marketers aren’t choosing between these approaches; they’re learning to leverage both strategically.

The future belongs to those who can adapt quickly and understand their audience’s evolving needs. Whether you focus on traditional SEO, embrace GEO, or implement a hybrid approach, success depends on creating genuinely valuable content that serves your users’ needs. The tools and techniques may change, but the fundamental principle of providing value remains constant.

As we move forward, the organizations that thrive will be those that view this transition as an opportunity rather than a threat. By understanding both approaches and implementing them strategically, you can build a robust digital presence that performs well regardless of how search technology continues to evolve.
